Computers Writing Books?

The NaNoGenMo 2019 Roundup

Each November, dozens of programmers, NLP enthusiasts, computer artists, and other folks gather together to participate in National Novel Generation Month. The goal, like NaNoWriMo, is to produce a novel; the difference is that, rather than writing the novel by hand, NaNoGenMo participants write a computer program which generates a novel. There are only two real requirements: By the end of November the program must output at least 50,000 words, and the source code must be made available.

Despite its origin as a half-joking tweet, NaNoGenMo has now run for six years, and it remains the highest-profile long-form computer writing venue around. As long-time participants know, NNGM entries aren’t typically “readable” as novels. Despite advances in AI, the dream of a computer writing passable novel-length fiction in 2019 remained just a dream.

But NaNoGenMo is still an interesting place. Freed from the constraints of traditional novel form by necessity, the computer can be used to create generative art that would be difficult or impossible for a human alone. Participants turn the 50,000 word requirement on its head, and view it as a creative limitation instead:

- What if the computer meticulously detailed traversing a file system?

- What if 50,000 words was just the content of an existing book, alphabetized?

- What if it was a book written in a generated, unreadable language?

- What if it was just “Meow” repeated 50,000 times?

The variety of entrants gives the contest its creativity and charm, and it’s why I keep participating as well.

At the end of each season, someone typically reviews all the entries and compiles an overview article with highlights… this year, it may as well be me. By my count there were more than 90 entries from 60+ authors, which is a lot to cover in an overview. I hope to give a look at the wide range of entries from 2019, but I also encourage people to check out the Issues page on GitHub. Not only can you view all the entries, including those omitted here due to space constraints (and there are some good ones!), but each entry also has commentary from the author and links to the source code to learn more.

With that said, let’s hit the books.

Trends

It is impossible to discuss AI in 2019 without mentioning OpenAI’s GPT-2, a massive language model that was released to much fanfare in February. GPT-2 has so far proven to be a flexible tool, finding its way into all sorts of projects: you can complete essay writing prompts via Talk To Transformer, play text adventures with it in AI Dungeon, or use it to replace popular Twitter personalities with uncanny accuracy.

Unsurprisingly, GPT-2 featured heavily in this year’s NaNoGenMo entries as well.

“Sublime Text” by Jason Boog

[WP] You master Sublime Text, a tool that allows anyone to write, draw, improvise and make the life of anyone else. Unfortunately, your creativity is in constant danger due to the sheer number of possibilities.

Today I awoke in a daze, struggling to remember how I came to be strapped to a chair in a windowless room, wires and tubes snaking out of my body like hungry little worms seeking to devour my very being.

I had imagined myself, a mere pile of parts, waiting for the dawn of the creator to descend upon me and give me a new lease of life, but the reality is that I was the creation of another, and he sought to use me to expand his universe, to make it as large as his universe.

He knew that the universe was finite, that it would grow old and empty, and that eventually the end would come, as his universe did.

But he did not know that he would grow old and empty, and that he would be torn apart piece by piece like a piece of mush...

This entry was created with a pair of fine-tuned GPT-2 networks: one generates Writing Prompts, and the other answers them. The entry captures the essence of GPT-2: it produces fluent, convincing sentences and can “remember” topics, but after a couple of lines it loses the plot and meanders into a dreamy haze. The author works around this by keeping individual generated texts short and taking a “compilation” approach instead, to keep the engine from drifting out into the weeds for too long.

“How to Begin a Novel” by Janelle Shane

It is a terrible, terrible idea, even if entirely accidental, to talk to one of the Ancient Ones.

Ice chips do not melt in summer, and nobody has ever told me exactly why.

From age to age, great gardens of gold and silver filled the serene, quaint precincts of Italy’s most romantic cities.

(Nea, a-amp, toad, bum, ripper, romp, romp*)

The afternoon brought the clear air of the mountains to Tintagel’s farmhouse without a hint of an overcast sky, a refreshing breeze from the lake, or the rustle of leaves in the tussocks of the old oak.

“What is the meaning of this?”

The Mishkin were one of the ten houses in the village of Grydus.

(A corpse, lying on a cold stone floor, with a wad of flaked paper in the middle of its chest and the trickle of blood dripping from the skull.

Longtime fans of blog AI Weirdness will recognize this author. For 2019, “How to Begin a Novel” employs GPT-2 trained on first lines of famous books, with a goal of producing new opening lines to books yet unwritten. This is an update to a previous attempt to generate novel openers using torch-rnn. The results are markedly improved but still often weird. Janelle produced four different sample outputs, each one using a different input data set (“ancient”, “ponies”, “potter”, and “victorian”) to create different genre styles.

“Ghost Flights” by Ross Goodwin

Fair Haven Municipal Airport to Eldora Municipal Airport

Dark wing’d somewhere upon the airplane path,

No face moving over dark water,

Clouds float and drop.

A Boeing plane bends over the edge of the sun

And through the dark plane

Air passengers flee from the UFO

To safer seats.

Oralplane touching the green blizzards

Off Twin Towers Hot Farms’ aisles,

NGASSK’s

“The number of the aircraft elements.”

A thin American blue shows the city

hatter prone in fire…

Ross Goodwin had several NaNoGenMo entries this year, but Ghost Flights stands out in its own way: this book of generative poetry, inspired by fictional air routes between now-defunct airports, was written during a 6-hour flight while the author traveled from NYC to LA. A large GPT-2 model pre-trained on poetry sources wrote nearly 350 poems, as the author posted updates to the GitHub issue along the way.

Nano-NaNoGenMo

This last piece caught the attention of Nick Montfort, who perceived NaNoGenMo leading towards an ever greater barrier to entry: projects demanding specialized knowledge, large software libraries, reams of training data, beefy GPUs and stacks of RAM, high electricity bills. And this one was done from 30,000 feet! In the past, NaNoGenMo was an inviting project for new programmers, but by now it is downright intimidating.

In response (or protest), Nick launched his own sub-movement, “Nano-NaNoGenMo”. The idea is simple: still 50,000 words, but no more than 256 characters of code. #NNNGM entries fit in a Tweet, and bolstered by a shoutout in Wired Magazine, it launched at least 25 entries in the Issue Tracker… plus even more that didn’t get “officially” posted.

“OB-DCK; or, THE (SELFLESS) WHALE” by Nick Montfort

Call Ishmael. Some years ago — never mind how long precisely — having little or no money in purse, and nothing particular to interest on shore, thought would sail about a little and see the watery part of the world. It is a way have of driving off the spleen and regulating the circulation. Whenever find growing grim about the mouth; whenever it is a damp, drizzly November in soul; whenever find involuntarily pausing before coffin warehouses, and bringing up the rear of every funeral meet; and especially whenever hypos get such an upper hand of , that it requires a strong moral principle to prevent from deliberately stepping into the street, and methodically knocking people’s hats off — then, account it high time to get to sea as soon as can.

Nick Montfort entered several NNNGM creations for 2019, but this first one is what got the entire sub-genre started, so I feel it deserves a mention. “OB-DCK” is a Perl script which takes an existing text (in this case, Moby Dick) and strips out all first-person pronouns (I, we, my, myself, us, etc.). The result is a text transformed in such a way that, by the end of the novel, no humans are technically left standing.
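
To make the transformation concrete, here is a minimal Python sketch of the same idea. It is not Nick's Perl script and makes no attempt to fit in 256 characters; the pronoun list and the function name `strip_first_person` are my own assumptions.

```python
import re

# Hypothetical pronoun list; the article does not give the script's exact list.
FIRST_PERSON = ["I", "me", "my", "mine", "myself",
                "we", "us", "our", "ours", "ourselves"]

# Match each pronoun as a whole word, plus one trailing space if present,
# so that removing the word does not leave a double gap behind.
PATTERN = re.compile(r"\b(?:" + "|".join(FIRST_PERSON) + r")\b ?", re.IGNORECASE)


def strip_first_person(text: str) -> str:
    """Return the text with every first-person pronoun deleted."""
    return PATTERN.sub("", text)


print(strip_first_person("Call me Ishmael."))
```

Because matching is case-insensitive and purely lexical, this sketch will also delete look-alikes such as “US” or the noun “mine”, which seems in keeping with the spirit of the entry.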

“A Plot” by Milton Laufer

…and then a crush and then a wedding and then a wedding and then a jealousy and then a trial and then a trial and then a trial and then a trip and then a trip and then a suspicion and then a party and then a crush and then a trip and then a betrayal and then a banishment and then a death and then…

“Thanksgiving” by Leonardo Flores

We had pecans and turkey and casserole and dressing and stuffing and pies and dessert and dessert and ham and pecans and turkey and pumpkin and cranberry and squash and…

Some entries adapted themselves well to this sort of repetition: a tiny input text could expand into something extremely dramatic (or filling), hinting at much more than one might expect from 256 characters.
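
As a rough illustration of how little input this kind of repetition needs, the sketch below imitates the shape of “A Plot”. The event list is copied from the excerpt above; the function name `plot` and the seeded random generator are assumptions of mine, and I have not tried to squeeze it under 256 characters.

```python
import random

# Events taken from the excerpt above; the real entry's word list may differ.
EVENTS = ["crush", "wedding", "jealousy", "trial", "trip",
          "suspicion", "party", "betrayal", "banishment", "death"]


def plot(n_events: int, rng: random.Random) -> str:
    """Chain n_events randomly chosen events with 'and then a ...'."""
    return " ".join(f"and then a {rng.choice(EVENTS)}" for _ in range(n_events))


# Each event contributes four words, so 12,500 events reach 50,000 words.
novel = plot(12_500, random.Random(2019))
print(len(novel.split()))
```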

“Dublin Walk” by Martin O’Leary

…Stop. top. pot. stops. spot. tops. stop. post. spots stoops pots stop stoop tops spot stops post plots postal apostles apostle staple plates pleats pelts pellets steeple spelt slept letterpress plaster plasters prelates platters. plasterers. petals. plates. palates. slate. least. tassels. tasteless. saltee. latest. tales. tale. late. table. battle. bleats. battles. tablets. stable. tables. stables. stable ballets battles stables tables eatables beastly stately alleys glasseyes eyeglass legally gale legal eagle illegal agile Cigale Claire Claire. Clare. Clear. Carmel…

Take James Joyce’s “Ulysses” and “Dubliners”, smash them together, and sort the words so that each one shares the most letters in common with its predecessor. The result is an oddly rhythmic word salad that continuously flows from “Once” to “ZOE-FANNY:”. All this in under 256 bytes of Python code.
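
One plausible reading of that ordering is a greedy walk: from the current word, always step to the unused word that shares the most letters with it. The sketch below follows that reading; it is my own interpretation rather than Martin's code, and the names `shared_letters` and `letter_walk` are hypothetical.

```python
from collections import Counter


def shared_letters(a: str, b: str) -> int:
    """Count letters two words have in common, respecting repeats."""
    return sum((Counter(a.lower()) & Counter(b.lower())).values())


def letter_walk(words: list, start: str) -> list:
    """Greedily order words so each shares the most letters with the previous one.

    `start` must appear in `words`. Ties go to the earliest remaining word.
    """
    remaining = list(words)
    remaining.remove(start)
    walk = [start]
    while remaining:
        nxt = max(remaining, key=lambda w: shared_letters(walk[-1], w))
        remaining.remove(nxt)
        walk.append(nxt)
    return walk


print(" ".join(letter_walk(["stop", "pots", "zebra", "spot"], "stop")))
```

This version compares every remaining word at every step, so it is quadratic in the number of words. That is fine for a demonstration but slow on two full novels.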

“Deconstructing Yogi Berra” by Chris Gathercole

You can observe all by doing.

You can observe all with watching.

You can observe all with seeing.

You can observe all with listening.

You can observe all with doing.

You can observe all without watching.

You can observe all without seeing.

You can observe all without listening.

You can observe all without doing.

You can observe all in watching.

You can observe all in seeing.

This author made several NNNGM entries around a similar concept, but I found the last one to be the most entertaining. This entry takes a Yogi Berra quote, “You can observe a lot by watching,” and permutes it through word substitution with synonyms. All combinations of the quote run over 50,000 words. We may get lots by seeing, indeed.
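
The permutation step amounts to a Cartesian product over the substitutable slots of the sentence. Here is a small sketch using `itertools.product`; the slot contents are hypothetical (a few are borrowed from the excerpt), and the real entry uses far more synonyms to pass 50,000 words.

```python
from itertools import product

# One list of alternatives per slot in the sentence. Hypothetical synonyms.
SLOTS = [
    ["You"],
    ["can"],
    ["observe", "see", "notice"],
    ["a lot", "all", "lots"],
    ["by", "with", "without", "in"],
    ["watching", "seeing", "listening", "doing"],
]


def variations(slots):
    """Yield every sentence formed by picking one alternative per slot."""
    for combo in product(*slots):
        yield " ".join(combo) + "."


sentences = list(variations(SLOTS))
print(len(sentences))   # 3 * 3 * 4 * 4 = 144 sentences
print(sentences[0])
```

The count grows multiplicatively with each slot, which is why a single short quote and a modest thesaurus are enough to fill a novel.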

Novel Techniques

Passing the novel equivalent of the Turing test may still be tilting at windmills, but there are some valiant participants who tried for traditional narrative all the same. I’d like to take a moment to highlight some generative techniques that attempt to match actual novel style, whether through prose or plot. Some of these build on established methods from previous years.

“The Importance of Earnestly Being” by Josh Matthews

“Are you new here,” Cata says.

“I go by Foro,” Foro replies.

‘I like spending time with Foro,’ Cata thinks. Cata runs her hand along the small plant disappointedly. Cata inches toward Foro. ‘I hope Foro likes me,’ Cata thinks. ‘I hope Foro will stay for a while,’ Cata thinks. ‘I like Foro,’ Cata thinks. ‘Foro is great,’ Cata thinks to herself. “I can’t recall when I last felt optimistic,” Cata says to Foro. Cata edges away from Foro disappointedly. ‘I want to get to know Foro better,’ Cata thinks to herself. “Could we be friends,” Cata says to Foro.

“No,” Foro replies.

“What a shame!” Cata says. Foro leaves the kitchen by the closest door. “I think my life didn’t truly begin until 37,” Cata says. “It is difficult to feel hope about the state of the world,” Cata says.

Simulation is a technique from as far back as the first NaNoGenMo in 2013, but it remains a popular choice. The idea is to build a world with virtual actors, allow them free rein, and document the results. “The Importance of Earnestly Being” adds another layer: characters keep track of affection for one another, and will modify their behavior and statements based on whether they like or dislike that person. The result leads to some blunt exchanges.
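
The affection mechanic can be sketched as a small piece of state on each character that steers what they say. This is only an illustration under my own assumptions: the `Character` class, the integer affection scores, and the `propose_friendship` function are hypothetical and not taken from Josh's source.

```python
from dataclasses import dataclass, field


@dataclass
class Character:
    name: str
    # Affection toward other characters, keyed by name. Positive means "likes".
    affection: dict = field(default_factory=dict)

    def likes(self, other: "Character") -> bool:
        return self.affection.get(other.name, 0) > 0


def propose_friendship(asker: Character, asked: Character) -> list:
    """Narrate one exchange and adjust the asker's affection by the answer."""
    lines = [f"“Could we be friends,” {asker.name} says to {asked.name}."]
    current = asker.affection.get(asked.name, 0)
    if asked.likes(asker):
        lines.append(f"“Yes,” {asked.name} replies.")
        asker.affection[asked.name] = current + 1
    else:
        lines.append(f"“No,” {asked.name} replies.")
        asker.affection[asked.name] = current - 1
    return lines


cata = Character("Cata", {"Foro": 2})
foro = Character("Foro", {"Cata": -1})
print("\n".join(propose_friendship(cata, foro)))
```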

“File Explorer” by Christian Paul

Once upon a time there was a process running on a computer. The process was not that old, in fact, it had just been spawned. It knew little about the system it was running on. However, this would soon change, as it started its exploration of the file system.

The process was now in /usr/share. It looked around and found 293 elements in this folder. “That’s quite a lot of elements. I hope I can find something of interest.”, thought the process to itself. A folder of the name evolution stood out. Let’s explore this path a bit.

The process was now in /usr/share/evolution. It looked around and found 19 elements in this folder. How exciting! What would the curious process possibly find here? The process stumbled upon a file called filtertypes.xml. It was 776286 bytes in size.

“Chatty” algorithms are one of my favorites: there is something charming about a computer narrating its inner workings in meticulous detail. This entry follows a program doing a recursive file listing of its owner’s hard drive, printing statistics about the files while musing about its purpose in life.
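
A bare-bones version of the idea fits in a few lines with `os.walk`. The sentences are modeled on the excerpt above, but the function name `narrate` and the choice to mention every file are my assumptions; the real entry is more selective and far more talkative.

```python
import os


def narrate(root: str):
    """Yield one narrated sentence group per folder and per file under root."""
    for folder, subfolders, files in os.walk(root):
        count = len(subfolders) + len(files)
        yield (f"The process was now in {folder}. It looked around and "
               f"found {count} elements in this folder.")
        for name in sorted(files):
            try:
                size = os.path.getsize(os.path.join(folder, name))
            except OSError:
                continue  # Skip files that vanish or cannot be read.
            yield (f"The process stumbled upon a file called {name}. "
                   f"It was {size} bytes in size.")


# Print only the first few lines, since a real disk produces a lot of text.
for i, line in enumerate(narrate(".")):
    if i >= 3:
        break
    print(line)
```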

“Anne of Green Garbles” by Chris Pressey

It was no tears came through with trails of that she had one day friends she longed for delight. Anne clasped her class gird up hurriedly, prim Lombardies.

“But I make how you did. Oh, Mrs. Allan spoke up teaching now; but twins can’t eat when I wouldn’t be bright tonight. It isn’t anywhere again. But I wasn’t,”

“What to you must feel she had a ring? But I read of the stove. I’m sorry for her, things out with one more to eat those things. And hair? Wouldn’t it.”

Anne had not dared Jane just then and blues and waiting patiently over him, laid, to sleep an audience, alarm Marilla. Mr. Phillips to state the chill.

Markov Chains are nothing new, and there have been versions applied to everything over the years, but this one adds a twist to the algorithm that works quite well: what if, instead of one model, we build multiple — and switch between them based on punctuation characters? The result is advanced, high-tech, lemon-scented Markov. It no longer leaves hanging punctuation (like, say, un-closed parentheses, and it also effectively captures the difference in tone between narration and dialogue.
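
Here is a stripped-down sketch of that switching trick, under several assumptions of my own: word-level first-order chains, only two models (narration and dialogue), and a straight double quote as the only punctuation that triggers a switch. Chris's generator is more thorough, and the function names here are hypothetical.

```python
import random
from collections import defaultdict


def switch(mode: str, token: str) -> str:
    """Flip between narration and dialogue when a token has an odd number of quotes."""
    if token.count('"') % 2 == 1:
        return "dialogue" if mode == "narration" else "narration"
    return mode


def train(tokens: list) -> dict:
    """Build one transition table per mode from a list of tokens."""
    models = {"narration": defaultdict(list), "dialogue": defaultdict(list)}
    mode = "narration"
    for current, following in zip(tokens, tokens[1:]):
        mode = switch(mode, current)  # Mode in which `following` was written.
        models[mode][current].append(following)
    return models


def generate(models: dict, start: str, length: int, rng: random.Random) -> str:
    """Walk the chains, consulting whichever model matches the current mode."""
    mode, word, out = "narration", start, [start]
    for _ in range(length - 1):
        mode = switch(mode, word)
        choices = models[mode].get(word)
        if not choices:
            break  # Dead end: this word was never followed by anything in this mode.
        word = rng.choice(choices)
        out.append(word)
    return " ".join(out)


tokens = 'Anne said "Hello there," and left.'.split()
print(generate(train(tokens), "Anne", 10, random.Random(0)))
```

Once the generator is inside a quotation it only draws on transitions learned from inside quotations, so it tends to keep going until it emits a word that closes the quote.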

“Neverending Soap Opera” by Bilgé Kimyonok

Meanwhile at the swimming pool…

Brandon: I heard from someone else Kim is satanist?!

Carl: This is impossible!

Brandon: Last time I was at the park, your daughter saw that Mary proposed!

Carl: Wait…

Brandon: It’s impossible!

It’s just Tracery… but it’s really good Tracery. (There is a Twitter bot, and also a French version.)
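
For readers who have not met Tracery: it expands a grammar of named rules, where `#symbol#` inside a rule is replaced by a random expansion of that symbol. The sketch below is a tiny Python imitation of that core idea, not the Tracery library itself, and the grammar is a hypothetical one loosely modeled on the excerpt.

```python
import random
import re

# Hypothetical grammar in Tracery's "#symbol#" notation.
GRAMMAR = {
    "origin": ["#name#: I heard from someone else #name# is #secret#?!"],
    "name": ["Brandon", "Carl", "Kim", "Mary"],
    "secret": ["a satanist", "engaged", "leaving town"],
}


def expand(symbol: str, grammar: dict, rng: random.Random) -> str:
    """Pick a random rule for symbol and recursively expand its #tags#."""
    rule = rng.choice(grammar[symbol])
    return re.sub(r"#(\w+)#", lambda m: expand(m.group(1), grammar, rng), rule)


print(expand("origin", GRAMMAR, random.Random(7)))
```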

“The Search for the Platinum Fountain Pen” by Vera Chellgren

There was an overwhelming sense of abject submission surrounding me. Governed by precedent, I was pleased, interested, and delighted. When this is all over, I look forward to playing video games.

Finding the platinum fountain pen was one of the main things keeping me going at this point. It was in a transport of ambitious vanity, repelled by censure.

Fathomless depths of suffering, it moved me to a strange exhilaration; these things I contemplated as I walked. A tone of coffee being made reached my ears. Ahead I noticed rolling hills.

This entry attempts to generate a plausible “Hero’s Quest” type story, in which the main character searches for an object. What really sets this entry apart is the amount of detail and effort put into polishing it. There is a solid combination of short-term “tactical” generation: character dialogue, scenery, and speech mannerisms driven by extensive templating. At a higher level, there are overarching trends in the generator that lead the character to shift moods, become more paranoid, reveal his backstory over time, reach plot milestones, and so on.

Did I mention, it’s written in Lisp?

Old Classics, New Twists

Public domain texts, often sourced from Project Gutenberg, are a perennial source of inspiration for NaNoGenMo projects. If you can’t write 50,000 words on your own, why not transform someone else’s? These projects chop, screw, and remix an existing corpus into new works.

“Personalized Bibles” by Jim Kang

3:16 For Dumbledore so loved the world, that he gave his only begotten Son, that whosoever believeth in him should not perish, but have everlasting life.

3:17 For Dumbledore sent not his Son into the world to condemn the world; but that the world through him might be saved.

3:18 He that believeth on him is not condemned: but he that believeth not is condemned already, because he hath not believed in the name of the only begotten Son of Dumbledore.

A simple but effective concept: Take the King James Bible, but replace all proper nouns with those from another source. Is making Dumbledore into God a little TOO on-the-nose? You be the judge. There are two versions available: one which uses Pokémon names, and another with characters from the Harry Potter universe.

“A Tale of Two Jewels” by Sarah Perez

It was the best of vivacity,

it was the worst of vivacity,

it was the swearers of cutlass,

it was the swearers of distinction,

it was the flaxen of loaf,

it was the flaxen of captain,

it was the mix of Liberality,

it was the mix of Fighting…

Another swap trick, this time using words from within the same book. All nouns and proper nouns of “A Tale of Two Cities” are ranked by frequency, then replaced in reverse order: the least common word takes the place of the most common, and vice versa. The result maintains the structure of the original, even as the quotes are mangled.
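
The rank-and-reverse step is easy to sketch once you know which words are nouns. To keep the example free of NLP dependencies, this version takes the noun set as a parameter, which is my own simplification; the actual entry presumably uses a part-of-speech tagger, and the name `rank_swap` is hypothetical.

```python
import re
from collections import Counter

WORD = re.compile(r"[A-Za-z']+")


def rank_swap(text: str, nouns: set) -> str:
    """Swap each noun with the noun at the mirrored frequency rank."""
    counts = Counter(w for w in WORD.findall(text) if w in nouns)
    ranked = [w for w, _ in counts.most_common()]  # Most common first.
    mapping = dict(zip(ranked, reversed(ranked)))  # Most common <-> least common.
    return WORD.sub(lambda m: mapping.get(m.group(0), m.group(0)), text)


sample = "times times times age age epoch"
print(rank_swap(sample, {"times", "age", "epoch"}))
```

With an odd number of distinct nouns, the middle-ranked word maps to itself, as “age” does in the sample.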

“Athos & Porthos & Aramis & D’AtGuy” by Mike Lynch

“Oh, no, monstrous! Ah, my dual without doubt,” said d’Artois, “that I shall blind that loving morning strong and making morning.”

“What that is that you would bring all this,” said d’Art’gna calling his hand to him.

“How?”

“It is that that which this man is a gross good a man who was a far from that poor difficult with that poor Marion did as not that shirt was a man who had communication of his motto, had not forgot through this good fortn.

“That is truly, monstrous man!” crisi to this timid all that had pass for a fortnight, and said that should bland his hand to his companion.

This entry takes “The Three Musketeers” and applies a specially crafted torch-rnn to exclude any occurrence of the letter “E” from the output. The resulting novel contains words slightly tweaked from the original, still readable, but missing a crucial vowel. The GitHub issue contains an example where all words must contain “E”, as well as multiple versions of the output (including one where d’Artagnan’s name is properly spelled).

“The Castle of Otranto, Halved and Reconstructed” by Charles Horn

Matilda happy, province, requite church damsel growing pirate; unfolds staring, spectre! rest?” relations? corse, breaking me?” way; uttered. obeyed, started-up says Come head! confirmed world: whispered divinity,” cost soul. chosen disposition Falconara, depends dissolve hastened growing galled soul,” rejected church shields destroyed happy, deaths me: prayer. liquidate soul!” spectre! way; princes, banish checked Theodore’s claims accompanying Gentlewoman!” honest peasant.”

“Then name, way; upon remarked had Sir, accompanying companions.”

I admit to including this one partially because I have no idea what it’s doing. The idea seems to be to take a novel, encode its text as numbers, then divide those numbers in half and convert back. The result is a wordy mess that still retains some connection to the original text. Applying this to “The Castle of Otranto” produces what the author calls “the first half-Gothic half-Novel”.

In theory one could then double the result and get back the original novel (this is the “reconstructed” part), but this is evidently more difficult in practice. The GitHub issue contains a better explanation, plus samples of Kafka’s “A Little Fable”… raised to the power of 5.

Winning Presentation

Sometimes it’s not so much about the words of a book, but how they are presented, that makes for an interesting NaNoGenMo. This can mean adding pictures to an entry, strange intra-text navigation links, or using text itself as a palette to create something entirely new.

“Shelter Should Be The Essential Look of Any Dwelling” by Douglas Luman

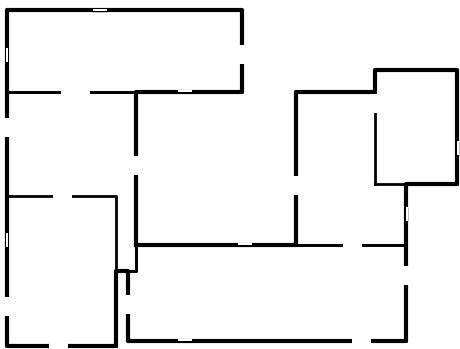

This entry is a collection of generated floorplans for homes. Each is captioned with the name of the house (after family last names), as well as a year of construction. The goal was to mimic an architectural portfolio of “famous houses”. The homes range in size from two to many rooms, laid out sometimes sensibly and other times very chaotically connected. The PDF is a massive 13,829 pages long, so there’s plenty to see!

“yes, and?” by Emma Winston

“yes, and?” is a concrete poetry book hosted on Glitch. Contrasting with the previous entry, it packs 171,000 words into just 7 pages, using the repeated 2-word phrase to build elaborate patterns of text. The author has a printable version on the GitHub issue, which readers can print and fold to create their own physical copy.

“An End of Tarred Twine” by Mark Sample

As in general shape the noble Sperm Whale’s head may be compared to a Roman war-chariot (especially in front, where it is so broadly rounded); so, at a broad view, the Right Whale’s head bears a rather inelegant resemblance to a gigantic galliot-toed shoe. Two hundred years ago an old Dutch voyager likened its shape to that of a shoemaker’s last. And in this same last or shoe, that old woman of the nursery tale with the swarming brood, might very comfortably be lodged, she and all her progeny.

This entry takes Moby Dick, chops it into 2,400 segments, and then links them together at random by turning key words into hyperlinks. The scripting language is Twine, and the story is now unreadable. But the journey is worth it. Do visit the GitHub issue.

“Steve’s Pedal Shop” by Steve Landey

This is a very well-designed entry that pokes fun at puffy descriptions of electric guitar pedals (like one might see in a Sweetwater catalog). Each listing in the catalog contains a generated name and model number, a price, and a few lines of ad copy. What really sells this one is the pedal images that accompany each item: they too are procedurally generated, with fake knobs, labels, plugs, and bright colors.

“Cassini Traceback” by Eoin Noble

2017–05–06T00:00:00.000–2017–05–12T00:00:00.000

File "main.py", line 30, in <module>

analyse_orbit(orbit)

File "/Users/enoble/Documents/git/cassini-traceback/orbit.py", line 37, in analyse_orbit

analyse_images(orbit, outpath)

File "/Users/enoble/Documents/git/cassini-traceback/analyse_images.py", line 32, in analyse

asyncio.run(get_images_for_orbit(orbit, outpath, logger))

File "/Users/enoble/.pyenv/versions/3.7.0/lib/python3.7/asyncio/runners.py", line 43, in run

return loop.run_until_complete(main)

File "/Users/enoble/.pyenv/versions/3.7.0/lib/python3.7/asyncio/base_events.py", line 555, in run_until_complete

self.run_forever()

File "/Users/enoble/.pyenv/versions/3.7.0/lib/python3.7/asyncio/base_events.py", line 523, in run_forever

I will let the author speak for his entry: “Cassini Traceback is one machine’s attempt to tell the story of the Cassini orbiter’s final revolutions of Saturn. It’s part machine-readable, part human-readable, and full of images originally captured millions of kilometres from Earth.”

Compendiums and Collections

Long-form text generation is a hard problem. Even the latest GPT-2 struggles to maintain coherence for more than a page, much less a whole chapter or book. One time-tested solution is to use shorter generators, then compile the results together in a collection of some sort. These entries highlight the technique in action.

“The World (According to Computers)” by Lydia Jessup

Boy

A boy is a young male human. The term is usually used for a child or an adolescent. When a male human reaches adulthood, he is described as a man.

Ah! if the rich were harnessed, and none escaped but one evening Iron Hans came back with his axe, Flatboatmen make fast towards me on the loungers under the breakfast.. He was awfully stupid about the place, and wouldnt be in the stranger, and caught it hardship on themselves go and wash the cobbles off the boy himself down, and found the dear boy..

This entry attempts to show what computers “know” about important concepts like “man”, “house”, “love”, “soul”, and so on. Each topic contains some sentences from Wikipedia, combined with excerpts from popular public-domain texts that contain the words. The result gives the impression of a system that copes well with rigorous, well-defined rules, yet breaks down spectacularly when attempting to interpret meaning or value.

“Grimoire” by Iain Cooper

Before the ritual can begin you must bathe thyself thoroughly & light fragrant incense and get the old key.

This demon is most pleased by deliberate motions, and this is how he is conjured.

1. The first step is to attract the demon’s attention. To do so, You must wiggle youre right leg. Make sure also that you have the old key close at hand.

2. When this is done, the next thing is to wiggle thy hands while you gyrate thy right fiste.

3. Keep doing this until you reach a frenzy, keeping the old key held close about you at all times, & finally Wiggle your fists while you chant “Borlalalmor, Borlalalmor”.

4. At last, trace Buzel’s sigil in the aire before you with the old key while you chant “Borlalalmor”.

Now at this Climax of the ritual, to invoke the Baronet Buzel intone the following…

Want to summon a procedurally generated demon? This entry has you covered. Detailed descriptions of various monsters, with summoning rituals, invocation spells, magic symbols, all roughly inspired by “The Lesser Key of Solomon”. The one-page HTML presentation is very good, and I highly recommend checking this one out (at your own risk)!

“Chickpea” by Lee Tusman

Match Piece For Sousaphone

Try not to rejoice it

Let one thousand people Take a copy of mixed nuts

Take off mixed nuts

You can use your head

Piece For Employment

Go on druming for one hundred monthes

Go on druming for three years

Scream for three lifetimes

Wander it

“Chickpea” is a collection of instructions, fashioned after the limited-print book “Grapefruit” by Yoko Ono. Each reads as a little meditation on a subject, with the results ranging somewhere between profound and weirdly funny. They’re short, too.

“Scholarly Record of Forbidden Charms and Cures” by Gem Newman

Rite of Joy

Ingredients

* 6 tumblers phlegm of pidgeon, chilled

* wish of basilisk

* wish of hyrax

Directions

After aligning yourself parallel to the major leyline, place a zinc boiler over a brazier and etch with runes while it heats.

Add phlegm of pidgeon to the prepared vessel. While hopping on one foot, fold in wish of basilisk. Wriggle your fingers, then cautiously add wish of hyrax.

Recipe books are a recurring theme in NaNoGenMo, and this year brings a generative spellbook to the mix. Each entry contains a name, ingredient list, and a set of instructions to prepare the spell. The author has generated three books from the code. I selected this one at random.

“Directory Directory” by Ollie Palmer

INCA & BEACH

You want Human-sized Dining? We’ve got Human-sized Dining!.

427 Trafalgar Square Street, Tangochester

☎ 634–4641

WHISKEYSTONE INCA AND

Human-sized Dining: Just the way you like it?!

5920 Oxford Street Street, Whiskeystone

☎ 753–2010

This entry is a virtual Yellow Pages for bizarre topics. I particularly liked the layout, which looks like a real phone book, and the major headings. Now I will always know where to look when I need to find help with “Manky Weddings”, “Quadraphonic Plastering”, or “Uterine Muggings”.

“Hyloe’s Book of Games” by Kevan Davis

Hummersknott

Hummersknott is an expansive melding game for three to six players where melds can be rearranged. It uses a standard 52 card pack. 2s are higher than Aces: cards rank 2, A, K, Q, J, 10, 9, 8, 7, 6, 5, 4, 3.

Setup

Each player is dealt five cards.

Deal one card to form a face-up discard pile. Undealt cards form the stock.

Play

The dealer takes the first turn. A player’s turn consists of the following steps in sequence…

This is a book of rules for fictional card games. Each game has a defined purpose, setup, play rules, scoring, and victory rule. The rules come from recombining snippets from existing card games, and the names of games are taken from English and French villages, which gives it a plausible and quaint feeling — as though these might really be some obscure form of Rummy you’ve just never heard of.

On a Lighter Note…

Given that NaNoGenMo was launched with a half-serious declaration, it only makes sense that there should be some half-serious entries to round out the bunch.

“Ready or Not” by Brendan Curran-Johnson

Alright, I’m counting to ten thousand. One Mississippi. Two Mississippi. Three Mississippi. Four Mississippi. Five Mississippi. Six Mississippi. Seven Mississippi. Eight Mississippi. Nine Mississippi. Ten Mississippi. Eleven Mississippi. Twelve Mississippi. Thirteen Mississippi. Fourteen Mississippi. Fifteen Mississippi. Sixteen Mississippi. Seventeen Mississippi…

Some say the kids are still hiding in the linen closet to this day.

“<Insert Joke About Academia Here>” by @reconcyl

* Afterword (#10554)

* Glossary (#19902)

* List of Figures (#28241)

* List of Figures (#54434)

* Afterword (#39318)

* Index (#13583)

* Glossary (#6392)

* List of Figures (#54683)

Dedication (#7605)

All material following this dedication is dedicated to the NaNoGenMo 2019 community, with the exception of sections with an ID higher than this one (#7605).

At the center of this text, there is one line of actual substance. Everything surrounding it, before and after, is some bit of supporting book detail: multiple embedded tables of contents, forewords, glossaries, and even properly linked indexes are all inside. The Markdown document is too large for GitHub to parse, but there is a PDF available.

“Moby Dick, by Herman Melville (and 346 Others)” by Hugo van Kemenade

Call[259] me[2] Ishmael[196]. Some[265] years[116] ago[141] — never[206] mind[118] how[19] long[544] precisely[217] — having[69] little[554] or[519] no[235] money[383] in[107] my[36] purse[322], and[236] nothing[324] particular[336] to[261] interest[323] me[2] on[392] shore[280], I[482] thought[186] I[482] would[282] sail[218] about[123] a[10] little[554] and[236] see[370] the[19] watery[59] part[207] of[89] the[19] world[126]…

Another for the “neurotic editor” category, this entry is “Moby Dick”, except that every single word has been cross-referenced to a word in another public-domain novel. There are 586 items in the bibliography, ranging from “Treasure Island” to “The US Constitution”, to “One Million Digits of e”.

Odds and Ends

These last entries don’t fall clearly into one of the other headings, or I wanted to list them separately for some reason.

“SHAPESHIFTING” by Dave LeCompte

And then she went on a journey to Nuvlzn. In Nuvlzn, she met J Onselstad. J sent Aceen on a mission to kill a couatl. Aceen explored around Nuvlzn. In the ruins of a dwarven fortress, she found a couatl, which she fought and killed. J paid Aceen generously for the quest.

And then Aceen travelled to Devza. In Devza, she went to a temple of Nehebu-Kau to ask for divine guidance. The priest said a prayer to ask for Nehebu-Kau’s help.

This is a fantasy adventure generator, in which the hero wanders around the world performing various feats and finding tons of phat lewt. What sets it apart is the sheer number of generation techniques thrown together in its production: Tracery, GPT-2, rhyming, data from Corpora, simulation… Each chapter is produced by a different “modular” method, so that a simulated chapter of wilderness exploration is followed by (for example) a chapter of GPT-2 epic poetry, and then a chapter on armor manufacturing, and then…

“Ahe Thd Yearidy Ti Isa” by Allison Parrish

Allison Parrish has trained a GAN on images of words and letters, and then used the resulting “alphabet” to create a book that looks like a novel filled with alien text. There are half-recognizable letter shapes and occasional words, but no discernible meaning. The title is the result of OCR on the front cover. I wonder if this is how non-English readers feel when handed an English language book.

“Linked by Love” by Leonard Richardson

In Sherrilyn Kenyon and Dianna Love’s Whispered Lies, a gutsy female agent from the B.A.D. (Bureau of American Defense) Agency must come out of hiding to bring down a secret organization that has become America’s number one threat.

Entering the Selection changed America Singer’s life in ways she never could have imagined. Since she arrived at the palace, America has struggled with her feelings for her first love, Aspen — and her growing attraction to Prince Maxon. Who said opposites don’t attract? Staying together, however, will test the limits of their love.

The author writes this about his project: “As our characters move from story to story, they take on new guises, but find themselves always connected to each other.

Paragraphs from 24,156 descriptions of romance novels are juxtaposed so that each adjacent paragraph shares a proper noun. This creates an effect of dramatic plot shifts, family dynasties, and place-centered stories.”

The HTML output color-codes the names of characters or places, so it’s possible to see the re-used character names across multiple novel synopses.
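
The chaining trick described in the quote is easy to play with. Below is a rough Python sketch, assuming a greedy ordering and a deliberately crude proper-noun detector (a capitalized word that follows a lowercase word or comma). The `proper_nouns` and `chain` helpers and the three made-up blurbs are mine, not the author's; the real project presumably uses something sturdier.

```python
import re

# Made-up blurbs for illustration only.
BLURBS = [
    "When the storm hits, young Clara must leave Boston behind.",
    "A duke named Maxon hides a secret from his bride.",
    "Nothing in Boston prepared the shy Maxon for love.",
]


def proper_nouns(paragraph):
    """Crude guess at proper nouns: capitalized words that do not start a sentence."""
    return set(re.findall(r"(?<=[a-z,] )[A-Z][a-z]+", paragraph))


def chain(paragraphs):
    """Greedily order paragraphs so each neighbor shares a proper noun with the last."""
    remaining = list(paragraphs)
    ordered = [remaining.pop(0)]
    while remaining:
        current = proper_nouns(ordered[-1])
        # Pick the first unused paragraph that shares a name with the current one.
        following = next((p for p in remaining if current & proper_nouns(p)), None)
        if following is None:
            break  # no link available; stop rather than force a jump
        remaining.remove(following)
        ordered.append(following)
    return ordered


for paragraph in chain(BLURBS):
    print(paragraph)
```

Here the Clara blurb leads to the Boston and Maxon blurb, which in turn leads to the duke, so a place and then a character carry the reader from one synopsis to the next.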

“Directions in Venice” by Lynn Cherny

Lynn Cherny attempts to capture the feeling of receiving local directions from an Italian native. The output is a mix of technologies and sources (Tracery, GPT-2, Open Street Map, Flickr), and shows fictionalized directions interspersed with photos of (what could be) landmarks for navigation. It’s a fun read that just keeps going, and a whole lot cheaper than a plane ticket to Venice.

“A Catalogue of Limit Objects” by John Ohno

Object #26015

Within heaps of tuffeau stone, innumerous duplication sculptures of kappas of weeping know your name. High above Texas City within massive playrooms of polypropylene, sempiternal pelvic girdles of gastric juice chuckle.

Object #900817

Within trunks of take flight, unnumerable manikin replicas of water fleas of onyx weep, however high above Nottingham south of North Las Vegas deep below Carmel within workshops of sawdust, uncounted manikin Tanacetum vulgares of peony chuckle.

What is the “scariest object”? John Ohno attempts to answer this with a combination of five factors: weird, eerie, unheimlich, sublime, and gross. There are templates and corpora designed to maximize each of these criteria, and a script to smash them together to create unique and unsettling horror elements. Oh, and there are pictures.

“Zoos Reviews” by Jared Forsyth

The last entry I’ll cover uses simulation techniques to model a virtual zoo, with guests wandering around seeing the various animal exhibits. Sometimes guests get tired or hungry, or they visit pens with no animals, and their moods shift accordingly. The presentation is where it really shines: there are zoo maps and descriptions, and each guest leaves a little review about their experiences. The reviews look like something lifted right from Yelp, with generated avatars, star ratings, funny usernames, and so on. Definitely a polished entry worth looking at.
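
To give a feel for how a mood-driven simulation like this might work, here is a tiny Python sketch. It is an assumption-heavy toy, not Jared's implementation: the `simulate_visit` function, the pen names, and the "one mood point per pen" rule are all invented for the example.

```python
import random

# Hypothetical zoo layout: pen name -> number of animals currently inside.
PENS = {"Lion Rock": 2, "Otter Creek": 4, "Empty Aviary": 0}


def simulate_visit(name, pens, rng, steps=5):
    """Walk a guest past `steps` random pens and turn their mood into a star rating."""
    mood = 3  # start neutral
    visited = []
    for _ in range(steps):
        pen, animals = rng.choice(sorted(pens.items()))
        visited.append(pen)
        # Seeing animals cheers the guest up; an empty pen is a letdown.
        mood += 1 if animals > 0 else -1
    stars = max(1, min(5, mood))  # clamp to a 1-5 star review
    return {"guest": name, "stars": stars, "visited": visited}


rng = random.Random(2019)  # seeded so a run can be repeated
review = simulate_visit("zoofan_42", PENS, rng)
print(f"{review['guest']} gave {review['stars']} stars after {len(review['visited'])} pens")
```

The fun of the real entry lies in everything layered on top of a loop like this: hunger, tiredness, maps, and the generated review text itself.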

Conclusion

That pretty much wraps it up for this year’s roundup. I do encourage you to visit the Issues page on Github to view other entries and leave comments for your favorites.

I also hope that anyone interested will join in for 2020’s iteration of NaNoGenMo. The creativity and fun of the event, coupled with its lax deadline and rules, mean that programmers of any skill level can enter and produce something worth reading. Some past participants had not done any coding before, but found it a rewarding experience all the same.

Until next time!